Koala

A dialogue model for research purposes by UC Berkeley

About Koala

Koala is a dialogue model developed at UC Berkeley for research purposes. In a user study, Koala answered a variety of user queries effectively: its responses were rated at least as good as ChatGPT's in half of the cases, and usually better than those of Stanford's Alpaca model. A web demo is available for public use.

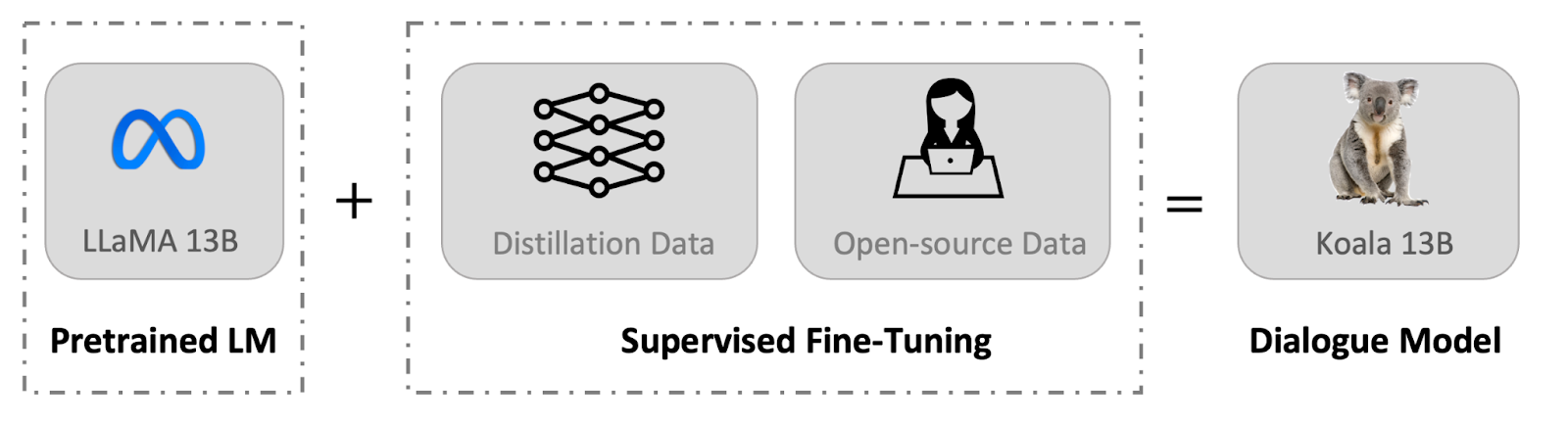

Koala was trained by fine-tuning Meta’s LLaMA on dialogue data scraped from the web, with a particular focus on responses that large language models such as ChatGPT gave to user queries. The developers chose quality over quantity: the original dataset of 60,000 dialogues was reduced to around 30,000 after redundant and non-English dialogues were removed. ChatGPT and human responses from the HC3 English dataset (87,000 question-answer pairs) were also used.
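The filtering step described above (dropping redundant and non-English dialogues) can be illustrated with a minimal sketch. This is not the Koala team's actual pipeline, which the source does not describe. The function names `normalize`, `looks_english`, and `filter_dialogues`, the 0.9 threshold, and the ASCII-letter heuristic are all assumptions made for illustration; a real pipeline would more likely use a proper language-identification model.

```python
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies match."""
    return re.sub(r"\s+", " ", text).strip().lower()


def looks_english(text: str, threshold: float = 0.9) -> bool:
    """Crude stand-in for a language detector (an assumption, not Koala's method):
    the share of ASCII letters among all alphabetic characters."""
    letters = [ch for ch in text if ch.isalpha()]
    if not letters:
        return False
    ascii_letters = sum(1 for ch in letters if ch.isascii())
    return ascii_letters / len(letters) >= threshold


def filter_dialogues(dialogues: list[str]) -> list[str]:
    """Keep the first copy of each dialogue and drop ones that do not look English."""
    seen: set[str] = set()
    kept: list[str] = []
    for dialogue in dialogues:
        key = normalize(dialogue)
        if key in seen or not looks_english(dialogue):
            continue
        seen.add(key)
        kept.append(dialogue)
    return kept


# Hypothetical sample data: one dialogue, a near-duplicate, and a non-English entry.
sample = [
    "User: What is a koala?\nAssistant: A marsupial native to Australia.",
    "user: what is a koala?   assistant: a marsupial native to australia.",
    "ユーザー: コアラとは何ですか?",
]
filtered = filter_dialogues(sample)
```

Here the second entry is dropped as a duplicate of the first after normalization, and the third is dropped by the language heuristic, which mirrors the kind of reduction the article reports (60,000 dialogues down to around 30,000).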

In addition, open-source data from the OIG dataset, Anthropic's HH dataset, OpenAI's WebGPT dataset, and OpenAI's summarisation dataset were incorporated into Koala's training regimen.

Source: https://analyticsindiamag.com/uc-berkeley-releases-koala-for-research-purposes/
