M-VADER

A Model for Diffusion with Multimodal Context

About M-VADER

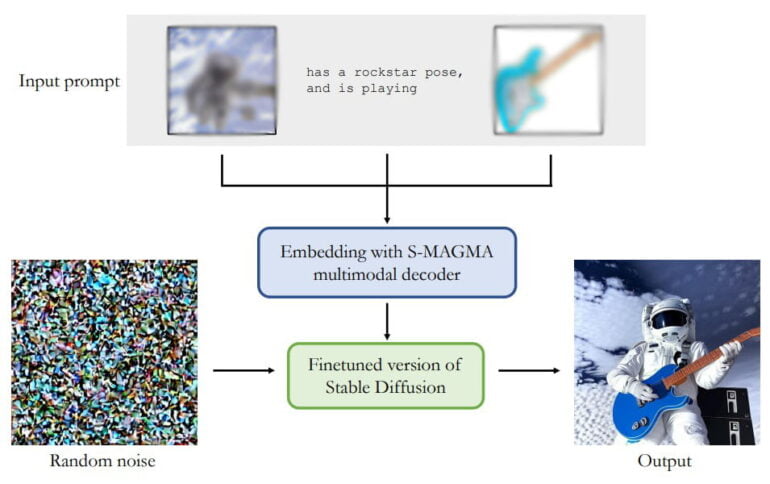

M-VADER is an AI-powered model, developed by Aleph Alpha in collaboration with the Technical University of Darmstadt, that produces pictures from multiple inputs. Unlike other generative models such as OpenAI's DALL-E 2, Midjourney, or Stable Diffusion, M-VADER can generate new images by combining a photo, sketch, or other visual source with a textual description.

M-VADER uses a diffusion model (DM) to generate images from a combination of images, text, and other inputs. The approach is inspired by successful DM image generation algorithms that let users specify an output image with a text prompt. An important component of M-VADER is the embedding model S-MAGMA, a 13-billion-parameter multimodal decoder that combines components from a vision-language model with biases fine-tuned for semantic search.
