ClipClap

Image Captioning with a CLIP Encoder and GPT-2

About ClipClap

ClipClap is an image captioning approach that needs only images and their captions. It does not require additional supervision, such as object annotations, to generate captions. Our model trains much faster than similar methods while achieving results comparable to the state of the art, even on the Conceptual Captions dataset, which contains over 3M images.

We use a pre-trained CLIP model to generate a semantic encoding for each image. A simple mapping network turns that encoding into a prefix for the caption, and a fine-tuned GPT-2 language model then generates a valid caption from that prefix. An alternative variant uses a transformer architecture for the mapping network and keeps GPT-2 frozen, so the language model is not fine-tuned at all. Even this lighter model achieves results on par with the state of the art on the nocaps dataset.
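To make the prefix idea concrete, here is a minimal plain-Python sketch of the data flow, built on several assumptions. The mapping network is modeled as a two-layer MLP with a tanh hidden layer and no biases. The weights are random stand-ins for trained parameters. The dimensions are toy values; a real setup would use something like CLIP ViT-B/32's 512-dimensional image embedding and GPT-2 small's 768-dimensional token embeddings. `PrefixMapper` and `build_lm_input` are hypothetical names for illustration. They are not functions from the CLIP_prefix_caption repository.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # stored as rows x cols


def random_matrix(rows: int, cols: int, rng: random.Random) -> Matrix:
    """Small random weights standing in for trained parameters (illustrative only)."""
    scale = 1.0 / math.sqrt(cols)
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a rows x cols matrix by a vector of length cols."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class PrefixMapper:
    """Hypothetical MLP mapping one CLIP image embedding to `prefix_length`
    vectors in the language model's token-embedding space."""

    def __init__(self, clip_dim: int, lm_dim: int, prefix_length: int, seed: int = 0):
        rng = random.Random(seed)
        self.lm_dim = lm_dim
        self.prefix_length = prefix_length
        out_dim = lm_dim * prefix_length
        hidden_dim = out_dim // 2  # assumed hidden width
        self.w1 = random_matrix(hidden_dim, clip_dim, rng)
        self.w2 = random_matrix(out_dim, hidden_dim, rng)

    def __call__(self, clip_embedding: Vector) -> List[Vector]:
        hidden = [math.tanh(h) for h in matvec(self.w1, clip_embedding)]
        flat = matvec(self.w2, hidden)
        # Reshape the flat output into prefix_length rows of size lm_dim.
        return [flat[i * self.lm_dim:(i + 1) * self.lm_dim]
                for i in range(self.prefix_length)]


def build_lm_input(prefix: List[Vector], caption_embeddings: List[Vector]) -> List[Vector]:
    """Prepend the image prefix to the caption's token embeddings; the language
    model would then be trained to predict the caption tokens after the prefix."""
    return prefix + caption_embeddings


if __name__ == "__main__":
    mapper = PrefixMapper(clip_dim=8, lm_dim=6, prefix_length=4)
    prefix = mapper([0.1] * 8)               # stand-in for a CLIP image embedding
    caption = [[0.0] * 6 for _ in range(3)]  # stand-in for 3 caption token embeddings
    sequence = build_lm_input(prefix, caption)
    print(len(prefix), len(prefix[0]))  # 4 6
    print(len(sequence))                # 7
```

In the fine-tuned variant described above, both the mapping network and GPT-2 would be trained on the captions; in the frozen-GPT-2 variant only the mapping network's weights would be updated, and a transformer would take the place of this MLP.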

Source: https://github.com/rmokady/CLIP_prefix_caption

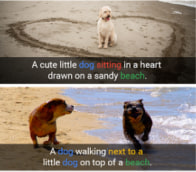

ClipClap screenshots

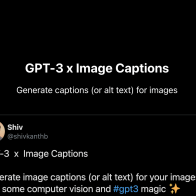

EA Chat GPT-3