Cerebras-GPT

A Family of Open, Compute-efficient, Large Language Models

About Cerebras-GPT

Cerebras has released seven GPT-3-style models ranging from 111 million to 13 billion parameters. The models were trained using the Chinchilla formula of roughly 20 training tokens per parameter, which is intended to maximize accuracy for a given compute budget.
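To make the Chinchilla formula concrete, here is a small worked sketch. It assumes a ratio of 20 training tokens per parameter and the widely used approximation that training costs about 6 FLOPs per parameter per token (C ≈ 6·N·D). The helper names `chinchilla_tokens` and `training_flops` are hypothetical and are not part of any Cerebras tooling.

```python
# Chinchilla-style compute-optimal budget (illustrative sketch).
# Assumptions: ~20 training tokens per parameter, and training compute
# approximated as C ≈ 6 * N * D FLOPs (N = parameters, D = tokens).

TOKENS_PER_PARAM = 20  # assumed Chinchilla ratio


def chinchilla_tokens(n_params: float) -> float:
    """Compute-optimal number of training tokens for a model with n_params."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * n_params * n_tokens


if __name__ == "__main__":
    # Smallest and largest model sizes mentioned in the text.
    for name, n in [("111M", 111e6), ("13B", 13e9)]:
        d = chinchilla_tokens(n)
        c = training_flops(n, d)
        print(f"{name}: {d / 1e9:.2f}B tokens, {c:.2e} FLOPs")
```

Because the token budget grows linearly with model size, compute grows with the square of the parameter count: the 13B model needs roughly 260B tokens, while the 111M model needs only about 2.2B.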

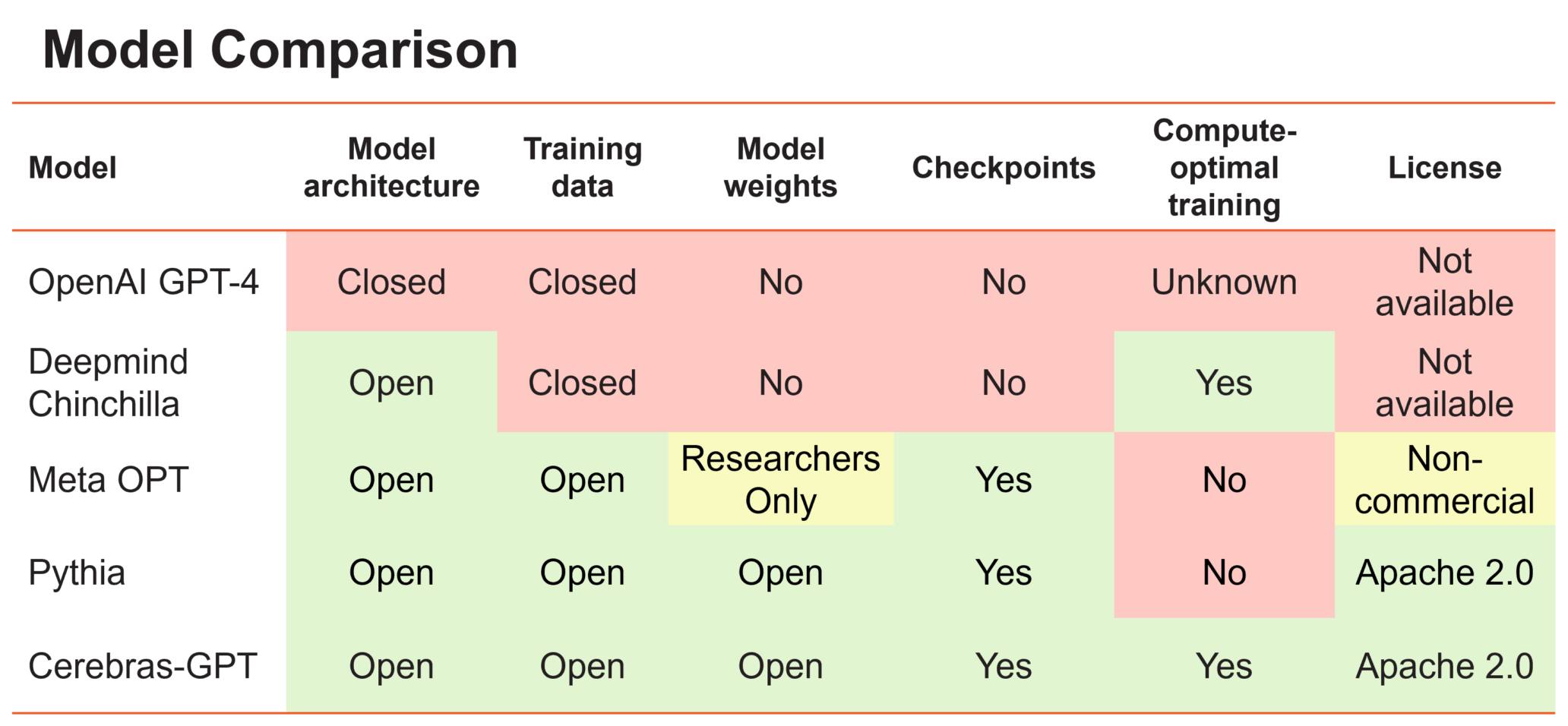

Access to artificial intelligence is becoming increasingly restricted: large models such as OpenAI's GPT-4 are being released without any information about their model architecture, training data, training hardware, or hyperparameters.

For LLMs (large language models) to be a truly open technology, it's important to have access to models that are open-source, reproducible, and royalty-free for both research and commercial applications. To this end, Cerebras has created a series of transformer models, called Cerebras-GPT, which are open-source and released under the Apache 2.0 license.

Cerebras trained these GPT-3-style models using the compute-optimal training schedule indicated by Chinchilla and the hyperparameter scaling rules of μ-parameterization (μP), and reports that they substantially outperform existing GPT-3 clones. According to Cerebras, this is the first time μ-parameterization has been used in a production environment. Because the models were trained from scratch, the community no longer has to rely on LLaMA (Large Language Model Meta AI).
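The idea behind μ-parameterization is that hyperparameters tuned on a small, narrow proxy model can be transferred to a wider model by rescaling them with the width multiplier m = target_width / base_width. The sketch below applies the commonly cited μP rules for Adam: hidden-layer learning rate scaled by 1/m, hidden-weight init std scaled by 1/√m, and output logits multiplied by 1/m. It is a simplified illustration, not Cerebras's training code; the `HParams` class, the `mup_transfer` function, and the example values are hypothetical, and a full μP setup also adjusts details such as attention scaling.

```python
from dataclasses import dataclass


@dataclass
class HParams:
    width: int              # hidden size (d_model) of the model
    hidden_lr: float        # Adam learning rate for hidden (matrix) weights
    hidden_init_std: float  # initialization std for hidden weights
    output_mult: float      # multiplier applied to the output logits


def mup_transfer(base: HParams, target_width: int) -> HParams:
    """Rescale hyperparameters tuned at base.width to target_width (muP, Adam).

    Hypothetical helper: with m = target_width / base.width,
    hidden LR -> lr / m, hidden init std -> std / sqrt(m),
    output logit multiplier -> mult / m.
    """
    m = target_width / base.width
    return HParams(
        width=target_width,
        hidden_lr=base.hidden_lr / m,
        hidden_init_std=base.hidden_init_std / m ** 0.5,
        output_mult=base.output_mult / m,
    )


if __name__ == "__main__":
    # Illustrative values only: a narrow proxy tuned at width 256.
    proxy = HParams(width=256, hidden_lr=6e-3, hidden_init_std=0.02, output_mult=1.0)
    print(mup_transfer(proxy, 4096))
```

The design point is that only the proxy needs an expensive hyperparameter sweep; larger models reuse its results through these deterministic rescalings instead of being re-tuned at each size.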
