AlexaTM 20B

Few-shot learning using a large-scale multilingual seq2seq model

About AlexaTM 20B

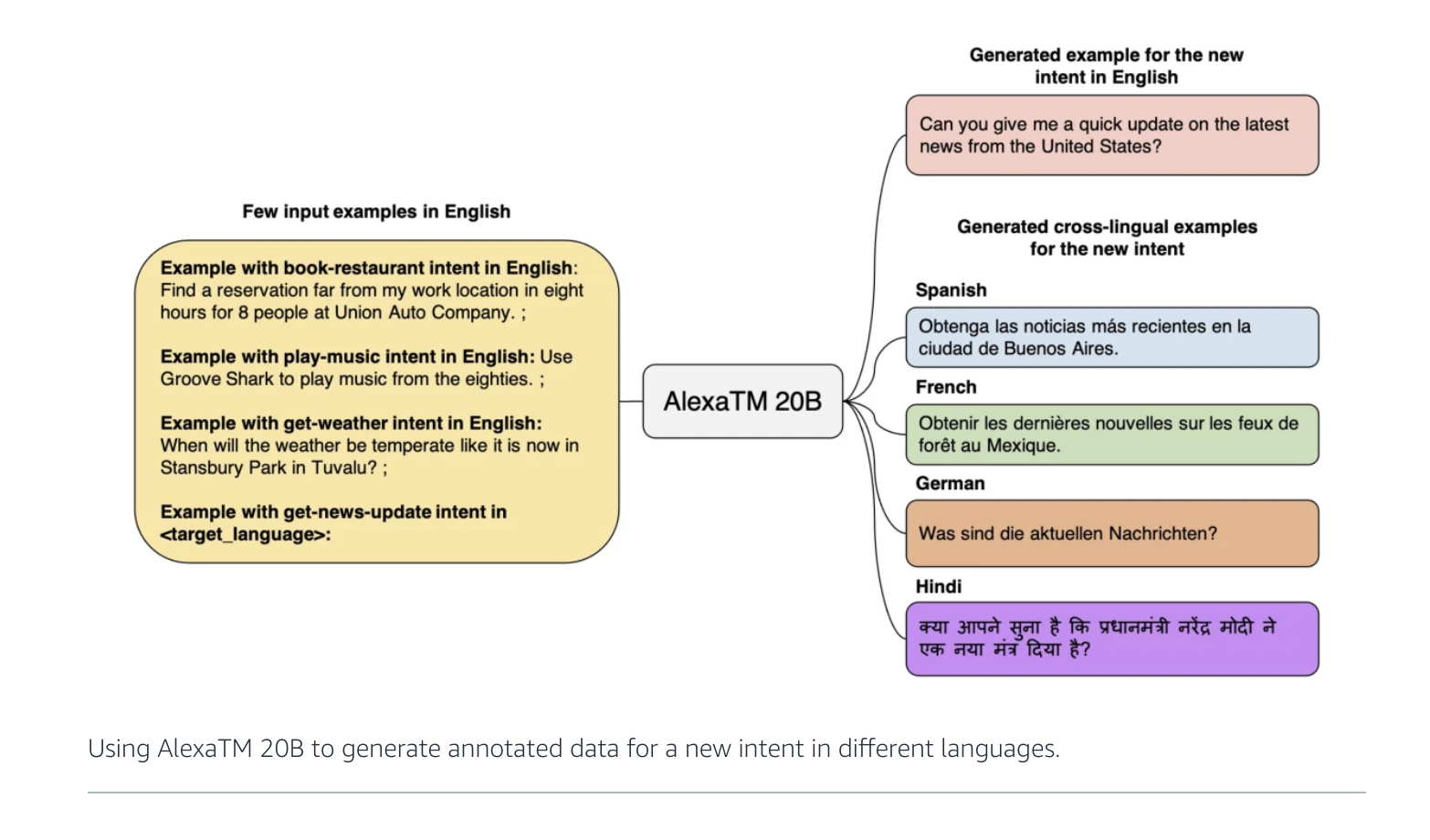

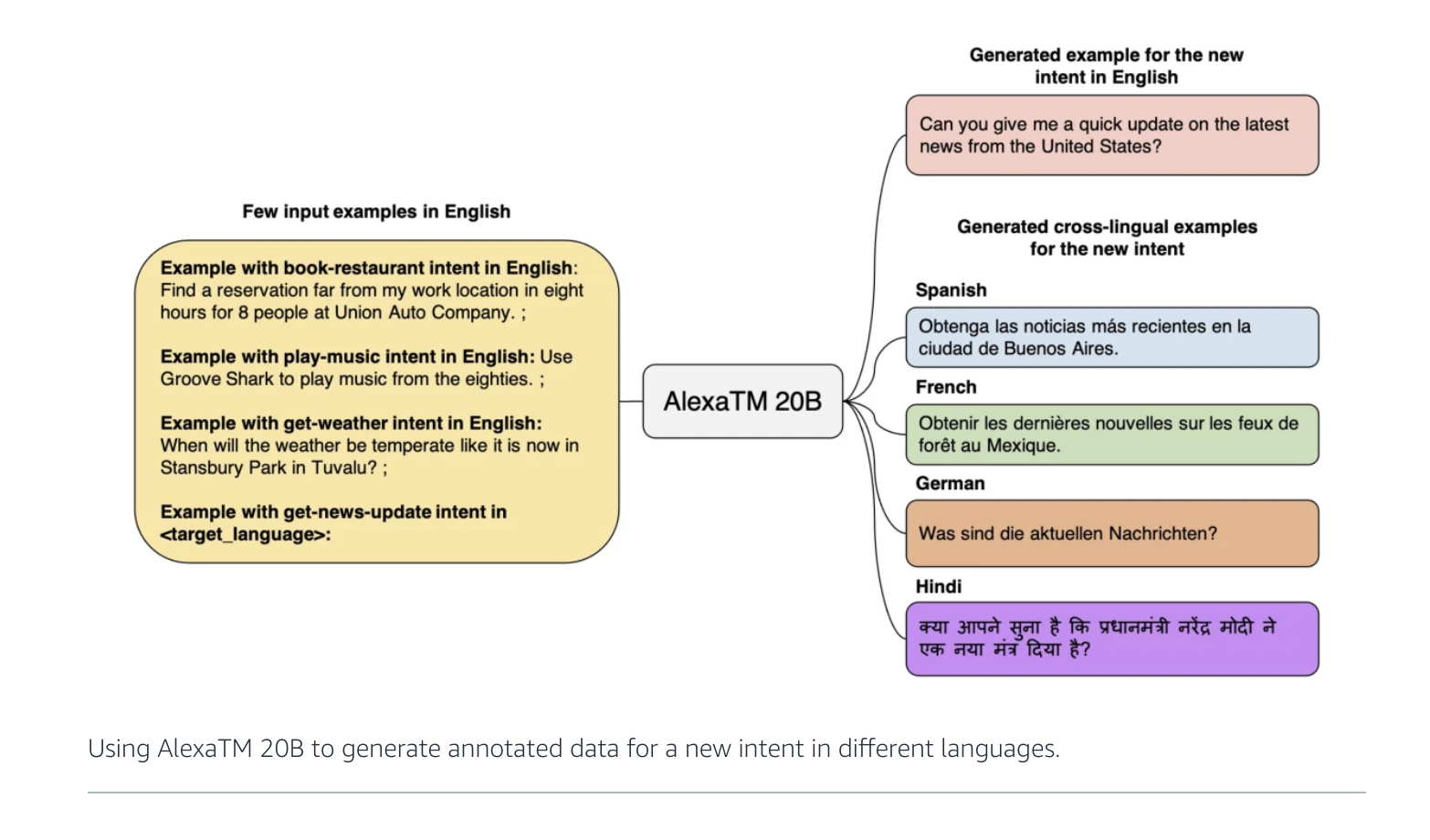

The Alexa Teacher Model (AlexaTM 20B) is a 20-billion-parameter multilingual seq2seq model that can learn from a small number of examples. On one-shot summarization tasks it achieves state-of-the-art results, outperforming the much larger PaLM decoder model. It is also state-of-the-art in one-shot machine translation on the Flores-101 dataset for languages including Arabic, English, French, German, Hindi, Italian, Japanese, Marathi, Portuguese, Spanish, Tamil, and Telugu. Amazon Science also reports that, in a zero-shot setting, AlexaTM 20B outperforms GPT-3 (175B) on the SuperGLUE and SQuADv2 datasets and achieves state-of-the-art performance on multilingual tasks such as XNLI, XCOPA, PAWS-X, and XWinograd. Overall, these results suggest that seq2seq models are a strong alternative to decoder-only models for training large-scale language models.
